
Polyglot Sandboxing

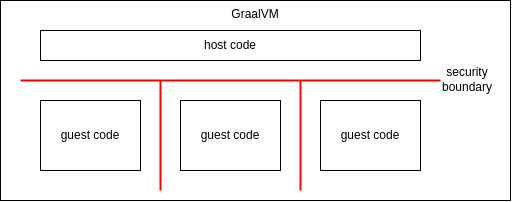

GraalVM allows a host application written in a JVM-based language to execute guest code written in JavaScript via the Polyglot Embedding API. When a sandbox policy is configured, a security boundary can be established between the host application and the guest code. For example, host code can execute untrusted guest code using the UNTRUSTED policy. Host code can also execute multiple mutually distrusting instances of guest code that are protected from one another. Used this way, polyglot sandboxing supports multi-tenant scenarios.

Use cases that benefit from introducing a security boundary are:

- Usage of third-party code, i.e., pulling in a dependency. Third-party code is typically trusted and scanned for vulnerabilities before use, but sandboxing it is an additional precaution against supply-chain attacks.

- User plugins. Complex applications might allow users to install community-written plugins. Traditionally, those plugins are considered trusted and often run with full privileges, but ideally they should not be able to interfere with the application except when intended.

- Server scripting. Allowing users to customize a server application with their own logic expressed in a general-purpose scripting language, for example, to implement custom data processing on a shared data source.

Sandbox Policies #

Depending on the use case and the associated acceptable security risk, a SandboxPolicy can be chosen, ranging from TRUSTED to UNTRUSTED, enabling and configuring an increasing range of restrictions and mitigations.

A SandboxPolicy serves two purposes: preconfiguration and validation of the final configuration.

It preconfigures the context and engine to comply with the policy by default.

If the configuration is customized further, validation of the policy ensures that the custom configuration does not unacceptably weaken the policy.

Trusted Policy #

The TRUSTED sandboxing policy is intended for guest code that is entirely trusted. This is the default mode. There are no restrictions to the context or engine configuration.

Example:

    try (Context context = Context.newBuilder("js")
            .sandbox(SandboxPolicy.TRUSTED)
            .build()) {
        context.eval("js", "print('Hello JavaScript!');");
    }

Constrained Policy #

The CONSTRAINED sandboxing policy is intended for trusted applications whose access to host resources should be regulated. The CONSTRAINED policy:

- Requires the languages for a context to be set.

- Disallows native access.

- Disallows process creation.

- Disallows system exit, prohibiting the guest code from terminating the entire VM where this is supported by the language.

- Requires redirection of the standard output and error streams. This is to mitigate risks where external components, such as log processing, may be confused by unexpected writes to output streams by guest code.

- Disallows host file or socket access. Only custom polyglot file system implementations are allowed.

- Disallows environment access.

- Restricts host access:

  - Disallows host class loading.

  - Disallows access to all public host classes and methods by default.

  - Disallows access inheritance.

  - Disallows implementation of arbitrary host classes and interfaces.

  - Disallows implementation of java.lang.FunctionalInterface.

  - Disallows host object mappings of mutable target types.

The HostAccess.CONSTRAINED host access policy is preconfigured to fulfill the requirements for the CONSTRAINED sandboxing policy.

Example:

    try (Context context = Context.newBuilder("js")
            .sandbox(SandboxPolicy.CONSTRAINED)
            .out(new ByteArrayOutputStream())
            .err(new ByteArrayOutputStream())
            .build()) {
        context.eval("js", "print('Hello JavaScript!');");
    }

Isolated Policy #

The ISOLATED sandboxing policy builds on top of the CONSTRAINED policy and is intended for trusted applications that may misbehave because of implementation bugs or processing of untrusted input. As the name suggests, the ISOLATED policy enforces deeper isolation between host and guest code. In particular, guest code running with the ISOLATED policy is executed in its own virtual machine, on a separate heap. This means that it no longer shares runtime elements such as the JIT compiler or the garbage collector with the host application, making the host VM significantly more resilient against faults in the guest VM.

In addition to the restrictions of the CONSTRAINED policy, the ISOLATED policy:

- Requires method scoping to be enabled. This avoids cyclic dependencies between host and guest objects. The HostAccess.ISOLATED host access policy is preconfigured to fulfill the requirements for the ISOLATED sandboxing policy.

- Requires setting the maximum isolate heap size. This is the heap size that will be used by the guest VM. If the engine is shared by multiple contexts, execution of these contexts will share the isolate heap.

- Requires setting the host call stack headroom. This protects against host stack starving on upcalls to the host: the guest will be prohibited from performing an upcall if the remaining stack size drops below the specified value.

- Requires setting the maximum CPU time limit. This restricts the workload to execute within the given time frame.

Example:

    try (Context context = Context.newBuilder("js")
            .sandbox(SandboxPolicy.ISOLATED)
            .out(new ByteArrayOutputStream())
            .err(new ByteArrayOutputStream())
            .option("engine.MaxIsolateMemory", "256MB")
            .option("sandbox.MaxCPUTime", "2s")
            .build()) {
        context.eval("js", "print('Hello JavaScript!');");
    }

Since Polyglot version 23.1, the ISOLATED and UNTRUSTED policies also require isolated images of the languages to be specified on the class or module path. Isolated versions of the languages can be downloaded from Maven using the following dependency:

    <dependency>
        <groupId>org.graalvm.polyglot</groupId>
        <artifactId>js-isolate</artifactId>
        <version>${graalvm.version}</version>
        <type>pom</type>
    </dependency>

The embedding guide contains more details on using polyglot isolate dependencies.

Untrusted Policy #

The UNTRUSTED sandboxing policy builds on top of the ISOLATED policy and is intended to mitigate risks from running actual untrusted code. The attack surface of GraalVM when running untrusted code consists of the entire guest VM that executes the code as well as the host entry points made available to guest code.

In addition to the restrictions of the ISOLATED policy, the UNTRUSTED policy:

- Requires redirection of the standard input stream.

- Requires setting the maximum memory consumption of the guest code. This limit applies in addition to the maximum isolate heap size and is backed by a mechanism that keeps track of the size of objects allocated by the guest code on the guest VM heap. This limit can be thought of as a “soft” memory limit, whereas the isolate heap size is the “hard” limit.

- Requires setting the maximum number of stack frames that can be pushed on the stack by guest code. This limit protects against unbounded recursion exhausting the stack.

- Requires setting the maximum AST depth of the guest code. Together with the stack frame limit, this puts a bound on the stack space consumed by guest code.

- Requires setting the maximum output and error stream sizes. As output and error streams have to be redirected, the receiving ends are on the host side. Limiting the output and error stream sizes protects against availability issues on the host.

- Requires untrusted code mitigations to be enabled. Untrusted code mitigations address risks from JIT spraying and speculative execution attacks. They include constant blinding as well as comprehensive use of speculative execution barriers.

- Further restricts host access to ensure there are no implicit entry points to host code. This means that guest-code access to host arrays, lists, maps, buffers, iterables and iterators is disallowed. The reason is that there may be various implementations of these APIs on the host side, resulting in implicit entry points. In addition, direct mappings of guest implementations to host interfaces via HostAccess.Builder#allowImplementationsAnnotatedBy are disallowed. The HostAccess.UNTRUSTED host access policy is preconfigured to fulfill the requirements for the UNTRUSTED sandboxing policy.

Example:

    import java.io.ByteArrayInputStream;
    import java.io.ByteArrayOutputStream;
    import org.graalvm.polyglot.Context;
    import org.graalvm.polyglot.HostAccess;
    import org.graalvm.polyglot.SandboxPolicy;

    try (Context context = Context.newBuilder("js")
            .sandbox(SandboxPolicy.UNTRUSTED)
            .in(new ByteArrayInputStream("foobar".getBytes()))
            .out(new ByteArrayOutputStream())
            .err(new ByteArrayOutputStream())
            .allowHostAccess(HostAccess.UNTRUSTED)
            .option("engine.MaxIsolateMemory", "1024MB")
            .option("sandbox.MaxHeapMemory", "128MB")
            .option("sandbox.MaxCPUTime", "2s")
            .option("sandbox.MaxStatements", "50000")
            .option("sandbox.MaxStackFrames", "2")
            .option("sandbox.MaxThreads", "1")
            .option("sandbox.MaxASTDepth", "10")
            .option("sandbox.MaxOutputStreamSize", "32B")
            .option("sandbox.MaxErrorStreamSize", "0B")
            .build()) {
        context.eval("js", "print('Hello JavaScript!');");
    }

For further information on how to set the resource limits, see the Resource Limits section below.

Host Access #

GraalVM allows exchanging objects between host and guest code and exposing host methods to guest code. When host methods are exposed to less privileged guest code, these methods become part of the attack surface of the more privileged host code. Therefore, starting with the CONSTRAINED policy, the sandboxing policies restrict host access to make host entry points explicit.

HostAccess.CONSTRAINED is the predefined host access policy for the CONSTRAINED sandbox policy.

To expose a host class method, it has to be annotated with @HostAccess.Export.

This annotation is not inherited.

Service providers such as polyglot file system implementations or output stream recipients for standard output and error stream redirections are exposed to guest code invocations.

Guest code can also implement a Java interface that has been annotated with @Implementable.

Host code using such an interface directly interacts with guest code.

Host code that interacts with guest code has to be implemented in a robust manner:

- Input validation. All data passed from the guest, for example, via parameters to an exposed method, is untrusted and should be thoroughly validated by host code where applicable.

- Reentrancy. Exposed host code should be reentrant, as guest code may invoke it at any time. Note that simply applying the synchronized keyword to a code block does not necessarily make it reentrant.

- Thread-safety. Exposed host code should be thread-safe, as guest code may invoke it from multiple threads at the same time.

- Resource consumption. Exposed host code should be aware of its resource consumption. In particular, constructs that allocate memory based on untrusted input data, either directly or indirectly, for example, through recursion, should either be avoided altogether or implement limits.

- Privileged functionality. Restrictions enforced by the sandbox can be entirely bypassed by exposing host methods that provide restricted functionality. For example, guest code with a CONSTRAINED sandbox policy cannot perform host file IO operations. However, exposing a host method to the context that allows writing to arbitrary files effectively bypasses this restriction.

- Side channels. Depending on the guest language, guest code may have access to timing information. For example, in JavaScript the Date() object provides fine-grained timing information. In the UNTRUSTED sandbox policy, the granularity of JavaScript timers is preconfigured to one second and can be lowered to 100 milliseconds. However, host code should be aware that guest code may time its execution, potentially discovering secret information if the host code performs secret-dependent processing.

Host code that is unaware of interacting with untrusted guest code should never be exposed directly to guest code without taking the aforementioned aspects into account. For example, implementing a third-party interface by forwarding all method invocations to guest code is an antipattern.
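The guidelines above can be illustrated with a minimal sketch of a host service intended to be exposed to guest code. The class `BoundedKeyValueStore` and its limits are hypothetical. In a real embedding, its public methods would also carry the `@HostAccess.Export` annotation. The annotation is omitted here only so that the sketch compiles with the JDK alone.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Objects;

// Hypothetical host service that an embedder might expose to guest code.
// In a real embedding the public methods would also be annotated with
// @HostAccess.Export; it is omitted so this compiles without the GraalVM SDK.
class BoundedKeyValueStore {
    static final int MAX_KEY_LENGTH = 64;
    static final int MAX_VALUE_LENGTH = 1024;
    static final int MAX_ENTRIES = 100;

    private final Map<String, String> entries = new HashMap<>();

    // Input validation: every argument originates from untrusted guest code.
    private static void checkKey(String key) {
        Objects.requireNonNull(key, "key");
        if (key.isEmpty() || key.length() > MAX_KEY_LENGTH) {
            throw new IllegalArgumentException("invalid key length: " + key.length());
        }
    }

    // Thread-safety: guest code may call from several threads, so all map access
    // is synchronized. No guest callbacks happen while the lock is held, so a
    // re-entrant call never observes a half-updated map.
    public synchronized void put(String key, String value) {
        checkKey(key);
        Objects.requireNonNull(value, "value");
        if (value.length() > MAX_VALUE_LENGTH) {
            throw new IllegalArgumentException("value too long: " + value.length());
        }
        // Resource consumption: cap how many entries guest code can create.
        if (!entries.containsKey(key) && entries.size() >= MAX_ENTRIES) {
            throw new IllegalStateException("store is full");
        }
        entries.put(key, value);
    }

    public synchronized String get(String key) {
        checkKey(key);
        return entries.get(key);
    }

    public synchronized int size() {
        return entries.size();
    }

    public static void main(String[] args) {
        BoundedKeyValueStore store = new BoundedKeyValueStore();
        store.put("greeting", "hello");
        System.out.println(store.get("greeting")); // hello
        try {
            store.put("x".repeat(65), "value");
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage()); // rejected: invalid key length: 65
        }
    }
}
```

The `synchronized` methods address thread-safety. The sketch is reentrant because it never calls back into guest code while holding the lock, not because of the keyword itself.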

Resource Limits #

The ISOLATED and UNTRUSTED sandbox policies require setting resource limits for a context.

Different configurations can be provided for each context.

If a limit is exceeded, evaluation of the code fails and the context is canceled with a PolyglotException that returns true for isResourceExhausted().

At this point, no more guest code can be executed in the context.

The --sandbox.TraceLimits option allows you to trace guest code and record the maximum resource utilization.

This can be used to estimate the parameters for the sandbox.

For example, a web server’s sandbox parameters could be obtained by enabling this option and either stress-testing the server, or letting the server run during peak usage.

When this option is enabled, the report is saved to the log file after the workload completes.

Users can change the location of the log file by using --log.file=<path> with a language launcher or -Dpolyglot.log.file=<path> when using a java launcher.

Each resource limit in the report can be passed directly to a sandbox option to enforce the limit.

See, for example, how to trace limits for a Python workload:

    graalpy --log.file=limits.log --sandbox.TraceLimits=true workload.py

limits.log:

    Traced Limits:
    Maximum Heap Memory: 12MB
    CPU Time: 7s
    Number of statements executed: 9441565
    Maximum active stack frames: 29
    Maximum number of threads: 1
    Maximum AST Depth: 15
    Size written to standard output: 4B
    Size written to standard error output: 0B

    Recommended Programmatic Limits:
    Context.newBuilder()
        .option("sandbox.MaxHeapMemory", "2MB")
        .option("sandbox.MaxCPUTime","10ms")
        .option("sandbox.MaxStatements","1000")
        .option("sandbox.MaxStackFrames","64")
        .option("sandbox.MaxThreads","1")
        .option("sandbox.MaxASTDepth","64")
        .option("sandbox.MaxOutputStreamSize","1024KB")
        .option("sandbox.MaxErrorStreamSize","1024KB")
        .build();

    Recommended Command Line Limits:
    --sandbox.MaxHeapMemory=12MB --sandbox.MaxCPUTime=7s --sandbox.MaxStatements=9441565 --sandbox.MaxStackFrames=64 --sandbox.MaxThreads=1 --sandbox.MaxASTDepth=64 --sandbox.MaxOutputStreamSize=1024KB --sandbox.MaxErrorStreamSize=1024KB

Re-profiling may be required if the workload changes or when switching to a different major GraalVM version.

Certain limits can be reset at any point during execution.

Limiting active CPU time #

The sandbox.MaxCPUTime option allows you to specify the maximum CPU time spent running guest code.

CPU time spent depends on the underlying hardware.

The maximum CPU time specifies how long a context can be active until it is automatically canceled and the context is closed.

By default the time limit is checked every 10 milliseconds.

This can be customized using the sandbox.MaxCPUTimeCheckInterval option.

As soon as the time limit is triggered, no further guest code can be executed with this context.

Any subsequent invocation of a method of the polyglot context throws a PolyglotException.

The used CPU time of a context includes time spent in callbacks to host code.

The used CPU time of a context typically does not include time spent waiting for synchronization or IO. The CPU time of all threads will be added and checked against the CPU time limit. This can mean that if two threads execute the same context then the time limit will be exceeded twice as fast.
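The accounting rule can be modeled in a few lines. CPU time reported for any thread is added to one shared total, so two busy threads exhaust the budget about twice as fast as one. `CpuBudget` is a hypothetical, stdlib-only model of this rule alone. It does not reproduce GraalVM's enforcement thread or the cancellation of the context.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical model: CPU time of all threads running a context is summed
// and compared against a single limit.
class CpuBudget {
    private final long limitNanos;
    private final AtomicLong usedNanos = new AtomicLong();

    CpuBudget(long limitNanos) {
        if (limitNanos <= 0) {
            throw new IllegalArgumentException("limit must be positive");
        }
        this.limitNanos = limitNanos;
    }

    // Adds CPU time consumed by any thread; returns true once the shared limit is exceeded.
    boolean charge(long nanos) {
        return usedNanos.addAndGet(nanos) > limitNanos;
    }

    boolean exceeded() {
        return usedNanos.get() > limitNanos;
    }

    long usedNanos() {
        return usedNanos.get();
    }

    public static void main(String[] args) throws InterruptedException {
        long ms = 1_000_000L;
        CpuBudget budget = new CpuBudget(500 * ms);
        // Two threads each consume 300ms of CPU time in the same context.
        Thread a = new Thread(() -> budget.charge(300 * ms));
        Thread b = new Thread(() -> budget.charge(300 * ms));
        a.start();
        b.start();
        a.join();
        b.join();
        System.out.println(budget.exceeded()); // true: 600ms > 500ms
    }
}
```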

The time limit is enforced by a separate high-priority thread that will be woken regularly. There is no guarantee that the context will be canceled within the accuracy specified. The accuracy may be significantly missed, for example, if the host VM causes a full garbage collection. If the time limit is never exceeded then the throughput of the guest context is not affected. If the time limit is exceeded for one context then it may slow down the throughput for other contexts with the same explicit engine temporarily.

Available units to specify time durations are ms for milliseconds, s for seconds, m for minutes, h for hours and d for days.

Both the maximum CPU time limit and the check interval must be a positive number followed by a time unit.
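The duration syntax can be sketched as a small parser. `SandboxDurations` is a hypothetical helper that follows only the rules stated above: a positive number followed by ms, s, m, h or d. It is not GraalVM's option parser. How it treats anything beyond those rules is an assumption; for example, it rejects whitespace and fractional values.

```java
import java.time.Duration;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical model of the time format used by sandbox.MaxCPUTime and
// sandbox.MaxCPUTimeCheckInterval: a positive integer immediately followed by a unit.
class SandboxDurations {
    private static final Pattern FORMAT = Pattern.compile("(\\d+)(ms|s|m|h|d)");

    static Duration parse(String text) {
        Matcher m = FORMAT.matcher(text);
        if (!m.matches()) {
            throw new IllegalArgumentException("expected <positive number><ms|s|m|h|d>: " + text);
        }
        long amount = Long.parseLong(m.group(1)); // NumberFormatException on overflow
        if (amount <= 0) {
            throw new IllegalArgumentException("duration must be positive: " + text);
        }
        switch (m.group(2)) {
            case "ms": return Duration.ofMillis(amount);
            case "s":  return Duration.ofSeconds(amount);
            case "m":  return Duration.ofMinutes(amount);
            case "h":  return Duration.ofHours(amount);
            default:   return Duration.ofDays(amount); // "d"
        }
    }

    static boolean isValid(String text) {
        try {
            parse(text);
            return true;
        } catch (IllegalArgumentException | ArithmeticException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println(parse("500ms")); // PT0.5S
        System.out.println(isValid("0s"));  // false: not positive
        System.out.println(isValid("10"));  // false: missing unit
    }
}
```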

    try (Context context = Context.newBuilder("js")
            .option("sandbox.MaxCPUTime", "500ms")
            .build()) {
        context.eval("js", "while(true);");
        assert false;
    } catch (PolyglotException e) {
        // triggered after 500ms
        // context is closed and can no longer be used
        // error message: Maximum CPU time limit of 500ms exceeded.
        assert e.isCancelled();
        assert e.isResourceExhausted();
    }

Limiting the number of executed statements #

The sandbox.MaxStatements option specifies the maximum number of statements a context may execute until it is canceled.

After the statement limit has been triggered for a context, the context is no longer usable and every use of it throws a PolyglotException that returns true for PolyglotException.isCancelled().

The statement limit is independent of the number of threads executing.

The limit may be set to a negative number to disable it.

Whether the limit also applies to statements of internal sources can be configured using sandbox.MaxStatementsIncludeInternal.

By default, the limit does not include statements of sources that are marked internal.

If a shared engine is used, the same internal configuration must be used for all contexts of the engine.

Depending on the guest language, a single statement does not necessarily execute in constant time.

For example, a statement that calls a JavaScript builtin such as Array.sort may count as a single statement, but its execution time depends on the size of the array.
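The counting rules above can be summarized in a small model. `StatementLimit` is hypothetical and stdlib-only. It keeps one counter per context that all threads share and treats a negative maximum as disabled. It also skips internal statements unless they are included, and refuses any further work once the limit has been exceeded. It says nothing about how GraalVM actually instruments statements.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical model of sandbox.MaxStatements semantics.
class StatementLimit {
    private final long max;                // negative: limit disabled
    private final boolean includeInternal; // mirrors sandbox.MaxStatementsIncludeInternal
    private final AtomicLong executed = new AtomicLong(); // shared by all threads
    private volatile boolean cancelled;

    StatementLimit(long max, boolean includeInternal) {
        this.max = max;
        this.includeInternal = includeInternal;
    }

    // Called before each guest statement executes.
    void onStatement(boolean fromInternalSource) {
        if (cancelled) {
            throw new IllegalStateException("context was cancelled");
        }
        if (max < 0 || (fromInternalSource && !includeInternal)) {
            return; // limit disabled, or an internal statement that is not counted
        }
        if (executed.incrementAndGet() > max) {
            cancelled = true; // every later use of the context fails as well
            throw new IllegalStateException("statement limit of " + max + " exceeded");
        }
    }

    boolean isCancelled() {
        return cancelled;
    }

    public static void main(String[] args) {
        StatementLimit limit = new StatementLimit(2, false);
        limit.onStatement(false); // purpose = 41
        limit.onStatement(true);  // internal statement, not counted
        limit.onStatement(false); // purpose++
        try {
            limit.onStatement(false); // purpose++ exceeds the limit
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // statement limit of 2 exceeded
        }
    }
}
```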

    try (Context context = Context.newBuilder("js")
            .option("sandbox.MaxStatements", "2")
            .option("sandbox.MaxStatementsIncludeInternal", "false")
            .build()) {
        context.eval("js", "purpose = 41");
        context.eval("js", "purpose++");
        context.eval("js", "purpose++"); // triggers max statements
        assert false;
    } catch (PolyglotException e) {
        // context is closed and can no longer be used
        // error message: Maximum statements limit of 2 exceeded.
        assert e.isCancelled();
        assert e.isResourceExhausted();
    }

AST depth limit #

The sandbox.MaxASTDepth option limits the maximum expression depth of a guest language function. Only instrumentable nodes count towards the limit.

The AST depth can give an estimate of the complexity of a function as well as its stack frame size.

Limiting the number of stack frames #

The sandbox.MaxStackFrames option specifies the maximum number of frames a context can push on the stack. A thread-local stack frame counter is incremented on function entry and decremented on function return.

The stack frame limit by itself serves as a safeguard against infinite recursion. Together with the AST depth limit, it can restrict total stack space usage.
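The frame counter described above can be sketched as follows. `StackFrameLimit` and the instrumented `factorial` function are hypothetical stand-ins for guest code. The sketch only shows three things: the per-thread increment on entry, the decrement on return, and the rejection of the frame that would exceed the maximum.

```java
// Hypothetical model of a thread-local stack frame counter.
class StackFrameLimit {
    private final int maxFrames;
    private final ThreadLocal<int[]> depth = ThreadLocal.withInitial(() -> new int[1]);

    StackFrameLimit(int maxFrames) {
        this.maxFrames = maxFrames;
    }

    // Function entry: refuse to push a frame beyond the maximum.
    void enter() {
        int[] d = depth.get();
        if (d[0] >= maxFrames) {
            throw new IllegalStateException("stack frame limit of " + maxFrames + " exceeded");
        }
        d[0]++;
    }

    // Function return.
    void exit() {
        depth.get()[0]--;
    }

    int currentDepth() {
        return depth.get()[0];
    }

    // Stand-in for a guest function; enter() sits outside the try block, so a
    // rejected frame is never counted and never decremented.
    static long factorial(StackFrameLimit limit, int n) {
        limit.enter();
        try {
            return n <= 1 ? 1 : n * factorial(limit, n - 1);
        } finally {
            limit.exit();
        }
    }

    public static void main(String[] args) {
        StackFrameLimit limit = new StackFrameLimit(10);
        System.out.println(factorial(limit, 5)); // 120
        try {
            factorial(limit, 1_000_000); // cut off at 10 frames instead of overflowing
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage()); // stack frame limit of 10 exceeded
        }
    }
}
```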

Limiting the number of active threads #

The sandbox.MaxThreads option limits the number of threads that can be used by a context at the same time. Multithreading is not supported in the UNTRUSTED sandbox policy.

Heap memory limits #

The sandbox.MaxHeapMemory option specifies the maximum heap memory guest code is allowed to retain during its run.

Only objects allocated by guest code count towards the limit; memory allocated during callbacks to host code does not.

This is not a hard limit, as the efficacy of this option also depends on the garbage collector used.

This means that guest code may exceed the limit.

    try (Context context = Context.newBuilder("js")
            .option("sandbox.MaxHeapMemory", "100MB")
            .build()) {
        context.eval("js", "var r = {}; var o = r; while(true) { o.o = {}; o = o.o; };");
        assert false;
    } catch (PolyglotException e) {
        // triggered after the retained size is greater than 100MB
        // context is closed and can no longer be used
        // error message: Maximum heap memory limit of 104857600 bytes exceeded. Current memory at least...
        assert e.isCancelled();
        assert e.isResourceExhausted();
    }

The limit is checked by a retained size computation, which is triggered either by the number of allocated bytes or by a low memory notification.

The allocated bytes are checked by a separate high-priority thread that will be woken regularly.

There is one such thread for each memory-limited context (one with sandbox.MaxHeapMemory set).

The retained bytes computation is done by yet another high-priority thread that is started from the allocated bytes checking thread as needed.

The retained bytes computation thread also cancels the context if the heap memory limit is exceeded.

Additionally, when the low memory trigger is invoked, all contexts on engines with at least one memory-limited context are paused together with their allocation checkers.

All individual retained size computations are canceled.

Retained bytes in the heap for each memory-limited context are computed by a single high-priority thread.

The heap memory limit will not prevent the context from causing OutOfMemory errors.

The limit is enforced less accurately for guest code that allocates many objects in quick succession than for code that allocates objects rarely.

Retained size computation for a context can be customized using the expert options sandbox.AllocatedBytesCheckInterval, sandbox.AllocatedBytesCheckEnabled, sandbox.AllocatedBytesCheckFactor, sandbox.RetainedBytesCheckInterval, sandbox.RetainedBytesCheckFactor, and sandbox.UseLowMemoryTrigger described below.

Retained size computation for a context is triggered when a retained bytes estimate exceeds a certain factor of specified sandbox.MaxHeapMemory.

The estimate is based on heap memory allocated by threads on which the context has been active.

More precisely, the estimate is the result of previous retained bytes computation, if available, plus bytes allocated since the start of the previous computation.

By default the factor of sandbox.MaxHeapMemory is 1.0 and it can be customized by the sandbox.AllocatedBytesCheckFactor option.

The factor must be positive.

For example, let sandbox.MaxHeapMemory be 100MB and sandbox.AllocatedBytesCheckFactor be 0.5.

The retained size computation is first triggered when allocated bytes reach 50MB.

If the computed retained size is 25MB, the next retained size computation is triggered when an additional 25MB has been allocated, and so on.
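The worked example above can be reproduced with a small model of the trigger rule. `RetainedSizeTrigger` is hypothetical. For simplicity, it assumes that a retained size computation finishes instantly, so the count of bytes allocated since the previous computation is reset when a result is recorded.

```java
// Hypothetical model of the allocated-bytes trigger for retained size computations.
class RetainedSizeTrigger {
    static final long MB = 1024L * 1024L;

    private final long maxHeapBytes;  // sandbox.MaxHeapMemory
    private final double factor;      // sandbox.AllocatedBytesCheckFactor
    private long lastRetainedBytes;   // result of the previous computation, 0 if none yet
    private long allocatedSinceLast;  // bytes allocated since that computation

    RetainedSizeTrigger(long maxHeapBytes, double factor) {
        if (factor <= 0) {
            throw new IllegalArgumentException("factor must be positive");
        }
        this.maxHeapBytes = maxHeapBytes;
        this.factor = factor;
    }

    long thresholdBytes() {
        return (long) (maxHeapBytes * factor);
    }

    // Records newly allocated bytes; returns true when a computation should start.
    boolean onAllocated(long bytes) {
        allocatedSinceLast += bytes;
        return lastRetainedBytes + allocatedSinceLast >= thresholdBytes();
    }

    // Records the result of a retained size computation (assumed to finish instantly).
    void onComputed(long retainedBytes) {
        lastRetainedBytes = retainedBytes;
        allocatedSinceLast = 0;
    }

    public static void main(String[] args) {
        RetainedSizeTrigger t = new RetainedSizeTrigger(100 * MB, 0.5);
        System.out.println(t.onAllocated(49 * MB)); // false: estimate 49MB < 50MB
        System.out.println(t.onAllocated(MB));      // true: estimate reaches 50MB
        t.onComputed(25 * MB);                      // computation finds 25MB retained
        System.out.println(t.onAllocated(24 * MB)); // false: 25MB + 24MB < 50MB
        System.out.println(t.onAllocated(MB));      // true: 25MB + 25MB reaches 50MB
    }
}
```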

By default, allocated bytes are checked every 10 milliseconds. This can be configured by sandbox.AllocatedBytesCheckInterval.

The smallest possible interval is 1ms. Any smaller value is interpreted as 1ms.

By default, the starts of two retained size computations of the same context must be at least 10 milliseconds apart.

This can be configured by the sandbox.RetainedBytesCheckInterval option. The interval must be positive.

The allocated bytes checking for a context can be disabled by the sandbox.AllocatedBytesCheckEnabled option.

By default it is enabled (“true”). If disabled (“false”), retained size checking for the context can be triggered only by the low memory trigger.

When the total number of bytes allocated in the heap for the whole host VM exceeds a certain factor of the total heap memory of the VM, low memory notification is invoked and initiates the following process.

The execution for all engines with at least one execution context which has the sandbox.MaxHeapMemory option set is paused, retained bytes in the heap for each memory-limited context are computed, contexts exceeding their limits are canceled, and then the execution is resumed.

The default factor is 0.7. This can be configured by the sandbox.RetainedBytesCheckFactor option.

The factor must be between 0.0 and 1.0. All contexts using the sandbox.MaxHeapMemory option must use the same value for sandbox.RetainedBytesCheckFactor.

When the usage threshold or the collection usage threshold of any heap memory pool has already been set, then the low memory trigger cannot be used by default, because the limit specified by the sandbox.RetainedBytesCheckFactor cannot be implemented.

However, when sandbox.ReuseLowMemoryTriggerThreshold is set to true and the usage threshold or the collection usage threshold of a heap memory pool has already been set, then the value of sandbox.RetainedBytesCheckFactor is ignored for that memory pool and whatever limit has already been set is used.

That way the low memory trigger can be used together with libraries that also set the usage threshold or the collection usage threshold of heap memory pools.
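The text above refers to the usage thresholds of the JDK's heap memory pools (`java.lang.management.MemoryPoolMXBean`). The following hypothetical helper only reads those pools and never modifies them. It reports whether a usage or collection usage threshold is already set. It also computes the threshold that a given factor (0.7 by default) would correspond to. It does not show how GraalVM registers its own trigger.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryType;
import java.lang.management.MemoryUsage;

// Hypothetical helper that inspects (but never modifies) the JDK heap memory pools.
class LowMemoryThresholds {
    // Usage threshold in bytes corresponding to a factor of the pool's maximum size.
    static long thresholdFor(long poolMaxBytes, double factor) {
        if (factor < 0.0 || factor > 1.0) { // bounds assumed to be inclusive
            throw new IllegalArgumentException("factor must be between 0.0 and 1.0");
        }
        if (poolMaxBytes < 0) {
            throw new IllegalArgumentException("pool has no defined maximum size");
        }
        return (long) (poolMaxBytes * factor);
    }

    // True if some component already set a usage or collection usage threshold.
    static boolean thresholdAlreadySet(MemoryPoolMXBean pool) {
        boolean usage = pool.isUsageThresholdSupported() && pool.getUsageThreshold() > 0;
        boolean collection = pool.isCollectionUsageThresholdSupported()
                && pool.getCollectionUsageThreshold() > 0;
        return usage || collection;
    }

    public static void main(String[] args) {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is no longer valid
            if (pool.getType() != MemoryType.HEAP || usage == null) {
                continue;
            }
            long max = usage.getMax(); // -1 if the pool has no defined maximum
            String proposed = max < 0 ? "n/a" : Long.toString(thresholdFor(max, 0.7));
            System.out.printf("%s: max=%d, threshold already set=%b, 0.7 threshold=%s%n",
                    pool.getName(), max, thresholdAlreadySet(pool), proposed);
        }
    }
}
```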

The described low memory trigger can be disabled by the sandbox.UseLowMemoryTrigger option.

By default it is enabled (“true”). If disabled (“false”), retained size checking for the execution context can be triggered only by the allocated bytes checker.

All contexts using the sandbox.MaxHeapMemory option must use the same value for sandbox.UseLowMemoryTrigger.

Limiting the amount of data written to standard output and error streams #

The sandbox.MaxOutputStreamSize and sandbox.MaxErrorStreamSize options limit the size of the output that guest code writes to standard output or standard error output during runtime. Limiting the size of the output can serve as protection against denial-of-service attacks that flood the output.

    try (Context context = Context.newBuilder("js")
            .option("sandbox.MaxOutputStreamSize", "100KB")
            .build()) {
        context.eval("js", "while(true) { console.log('Log message') };");
        assert false;
    } catch (PolyglotException e) {
        // triggered after writing more than 100KB to stdout
        // context is closed and can no longer be used
        // error message: Maximum output stream size of 102400 exceeded. Bytes written 102408.
        assert e.isCancelled();
        assert e.isResourceExhausted();
    }

    try (Context context = Context.newBuilder("js")
            .option("sandbox.MaxErrorStreamSize", "100KB")
            .build()) {
        context.eval("js", "while(true) { console.error('Error message') };");
        assert false;
    } catch (PolyglotException e) {
        // triggered after writing more than 100KB to stderr
        // context is closed and can no longer be used
        // error message: Maximum error stream size of 102400 exceeded. Bytes written 102410.
        assert e.isCancelled();
        assert e.isResourceExhausted();
    }
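Redirected output ends up in a host-side stream, so an embedder may also want the receiving end to be bounded. The following hypothetical `LimitedOutputStream` is a stdlib-only sketch of such a byte limit. The sandbox options cancel the context; this sketch instead rejects, with an IOException, the write that would cross the limit. Whether that behavior suits a given embedding is an assumption to check.

```java
import java.io.ByteArrayOutputStream;
import java.io.FilterOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical host-side stream that refuses to accept more than maxBytes bytes.
class LimitedOutputStream extends FilterOutputStream {
    private final long maxBytes;
    private long written;

    LimitedOutputStream(OutputStream out, long maxBytes) {
        super(out);
        this.maxBytes = maxBytes;
    }

    @Override
    public void write(int b) throws IOException {
        reserve(1);
        out.write(b);
        written++;
    }

    // FilterOutputStream.write(byte[]) delegates here, so bulk writes are checked too.
    @Override
    public void write(byte[] b, int off, int len) throws IOException {
        reserve(len);
        out.write(b, off, len);
        written += len;
    }

    private void reserve(int len) throws IOException {
        if (written + len > maxBytes) {
            throw new IOException("output limit of " + maxBytes + " bytes exceeded");
        }
    }

    long bytesWritten() {
        return written;
    }

    public static void main(String[] args) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        LimitedOutputStream limited = new LimitedOutputStream(sink, 16);
        byte[] message = "Log message\n".getBytes(StandardCharsets.UTF_8); // 12 bytes
        limited.write(message);
        try {
            limited.write(message); // 24 > 16: rejected, nothing more is forwarded
        } catch (IOException e) {
            System.out.println(e.getMessage()); // output limit of 16 bytes exceeded
        }
        System.out.println(sink.size()); // 12
    }
}
```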

Resetting Resource Limits #

It is possible to reset the limits at any point in time using the Context.resetLimits method.

This can be useful if a known and trusted initialization script should be excluded from the limits.

Only the statement, CPU time, and output / error stream limits can be reset.

    try (Context context = Context.newBuilder("js")
            .option("sandbox.MaxCPUTime", "500ms")
            .build()) {
        context.eval("js", /*... initialization script ...*/);
        context.resetLimits();
        context.eval("js", /*... user script ...*/);
        assert false;
    } catch (PolyglotException e) {
        assert e.isCancelled();
        assert e.isResourceExhausted();
    }

Runtime Defenses #

The main defense enforced by the ISOLATED and UNTRUSTED sandbox policy through the engine.SpawnIsolate option is that the Polyglot engine runs in a dedicated native-image isolate, moving execution of guest code to a VM-level fault domain separate from the host application, with its own heap, garbage collector and JIT compiler.

Apart from setting a hard limit for the memory consumption of guest code via the guest’s heap size, it also allows runtime defenses to be applied only to guest code, without degrading the performance of host code.

The runtime defenses are enabled by the engine.UntrustedCodeMitigation option.

Constant Blinding #

JIT compilers allow users to provide source code and, given the source code is valid, compile it to machine code. From an attacker’s perspective, JIT compilers compile attacker-controlled inputs to predictable bytes in executable memory. In an attack called JIT spraying an attacker leverages the predictable compilation by feeding malicious input programs into the JIT compiler, thereby forcing it to emit code containing Return-Oriented Programming (ROP) gadgets.

Constants in the input program are a particularly attractive target for such an attack, since JIT compilers often include them verbatim in the machine code. Constant blinding aims to invalidate an attacker’s predictions by introducing randomness into the compilation process. Specifically, constant blinding encrypts constants with a random key at compile time and decrypts them at runtime at each occurrence. Only the encrypted version of the constant appears verbatim in the machine code. Absent knowledge of the random key, the attacker cannot predict the encrypted constant value and, therefore, can no longer predict the resulting bytes in executable memory.

GraalVM blinds all immediate values and data embedded in code pages of runtime compiled guest code down to a size of four bytes.
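The idea behind constant blinding can be shown with XOR as the encryption step. `ConstantBlinding` is a conceptual, hypothetical illustration, not GraalVM's implementation. One way a compiler could apply the idea is to emit the encrypted value and the key as separate immediates, plus an XOR instruction that recombines them at runtime. That way, the attacker-chosen bytes never appear verbatim, and their encoded form changes with every compilation.

```java
import java.security.SecureRandom;

// Conceptual illustration of constant blinding using XOR; not GraalVM's code.
class ConstantBlinding {
    // What would be embedded in the machine code instead of the raw constant.
    static final class Blinded {
        final int encrypted; // constant ^ key
        final int key;       // fresh random key, unknown to the attacker in advance

        Blinded(int encrypted, int key) {
            this.encrypted = encrypted;
            this.key = key;
        }

        // The XOR the emitted code would perform at runtime to recover the value.
        int decode() {
            return encrypted ^ key;
        }
    }

    private static final SecureRandom RANDOM = new SecureRandom();

    // "Compile time": encrypt the constant with a newly drawn random key.
    static Blinded blind(int constant) {
        int key = RANDOM.nextInt();
        return new Blinded(constant ^ key, key);
    }

    public static void main(String[] args) {
        int attackerChosen = 0xC3C3C3C3; // four x86 RET opcodes, typical gadget bytes
        Blinded b = blind(attackerChosen);
        System.out.printf("embedded: 0x%08X, key: 0x%08X, decoded: 0x%08X%n",
                b.encrypted, b.key, b.decode());
    }
}
```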

Randomized Function Entry Points #

A predictable code layout makes it easier for attackers to find gadgets that have been introduced, for example, via the aforementioned JIT spray attack. While runtime compiled methods are already placed in memory that is subject to address space layout randomization (ASLR) by the operating system, GraalVM additionally pads the starting offset of functions with a random number of trap instructions.
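Function entry padding can be sketched as prefixing the code of a compiled method with a random number of trap bytes. `EntryPadding` is hypothetical. The trap opcode (x86 `INT3`, 0xCC) and the maximum padding are assumptions chosen for illustration. The sketch does not reflect GraalVM's actual padding range or trap instruction.

```java
import java.security.SecureRandom;
import java.util.Arrays;

// Hypothetical illustration of randomized function entry points.
class EntryPadding {
    static final byte TRAP = (byte) 0xCC; // x86 INT3; assumed trap instruction
    private static final SecureRandom RANDOM = new SecureRandom();

    // Returns the code prefixed with 0..maxPad trap bytes. The entry point is at
    // index (result.length - code.length); a jump that lands in the padding hits
    // a trap instead of a useful gadget.
    static byte[] pad(byte[] code, int maxPad) {
        if (maxPad < 0) {
            throw new IllegalArgumentException("maxPad must not be negative");
        }
        int padding = RANDOM.nextInt(maxPad + 1);
        byte[] result = new byte[padding + code.length];
        Arrays.fill(result, 0, padding, TRAP);
        System.arraycopy(code, 0, result, padding, code.length);
        return result;
    }

    public static void main(String[] args) {
        byte[] code = {0x55, 0x48, (byte) 0x89, (byte) 0xE5}; // push rbp; mov rbp, rsp
        byte[] placed = pad(code, 16);
        System.out.println("entry offset: " + (placed.length - code.length));
    }
}
```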

Speculative Execution Attack Mitigations #

Speculative execution attacks such as Spectre exploit the fact that a CPU may transiently execute instructions based on branch prediction information. In the case of a misprediction, the result of these instructions is discarded. However, the execution may have caused side effects in the micro-architectural state of a CPU. For example, data may have been pulled into the cache during transient execution - a side-channel that can be read by timing data access.

GraalVM protects against Spectre attacks by inserting speculative execution barrier instructions in runtime compiled guest code to prevent attackers from crafting speculative execution gadgets. A speculative execution barrier is placed at each target of a conditional branch that is relevant to Java memory safety to stop speculative execution.

Sharing Execution Engines #

Guest code of different trust domains has to be separated at the Polyglot engine level, that is, only guest code of the same trust domain should share an engine. When multiple contexts share an engine, all of them must have the same sandbox policy (the engine’s sandbox policy). Application developers may choose to share execution engines among execution contexts for performance reasons. While the context holds the state of the executed code, the engine holds the code itself. Sharing of an execution engine among multiple contexts needs to be set up explicitly and can increase performance in scenarios where a number of contexts execute the same code. In scenarios where contexts that share an execution engine for common code also execute sensitive (private) code, the corresponding source objects can opt out from code sharing with:

Source.newBuilder(…).cached(false).build()

Compatibility and Limitations #

Polyglot sandboxing is not available in GraalVM Community Edition.

Depending on the sandboxing policy, only a subset of Truffle languages, instruments, and options are available. In particular, sandboxing is currently only supported for the runtime’s default version of ECMAScript (ECMAScript 2022). Sandboxing is also not supported from within GraalVM’s Node.js.

Polyglot sandboxing is not compatible with modifications to the VM setup via (for example) system properties that change the behavior of the VM.

The sandboxing policy is subject to incompatible changes across major GraalVM releases to maintain a secure-by-default posture.

Polyglot sandboxing cannot protect against vulnerabilities in its operating environment, such as vulnerabilities in the operating system or the underlying hardware. We recommend adopting appropriate external isolation primitives to protect against the corresponding risks.

Differentiation with Java Security Manager #

The Java Security Manager is deprecated in Java 17 with JEP-411. The purpose of the security manager is stated as follows: “It allows an application to determine, before performing a possibly unsafe or sensitive operation, what the operation is and whether it is being attempted in a security context that allows the operation to be performed.”

The goal of the GraalVM sandbox is to allow the execution of untrusted guest code in a secure manner, meaning untrusted guest code should not be able to compromise the confidentiality, integrity or availability of the host code and its environment.

The GraalVM sandbox differs from Security Managers in the following aspects:

- Security boundary: The Java Security Manager features a flexible security boundary that depends on the actual calling context of a method. This makes “drawing the line” complex and error prone. A security-critical code block first needs to inspect the current calling stack to determine whether all frames on the stack have the authority to invoke the code. In the GraalVM sandbox, there is a straightforward, clear security boundary: the boundary between host and guest code, with guest code running on top of the Truffle framework, similar to how typical computer architectures distinguish between user mode and (privileged) kernel mode.

- Isolation: With the Java Security Manager, privileged code is on almost “equal footing” with untrusted code with respect to the language and runtime:

  - Shared language: With the Java Security Manager, untrusted code is written in the same language as privileged code, with the advantage of straightforward interoperability between the two. In contrast, in the GraalVM sandbox, a guest application written in a Truffle language needs to pass an explicit boundary to reach host code written in Java.

  - Shared runtime: With the Java Security Manager, untrusted code executes in the same JVM environment as trusted code, sharing JDK classes and runtime services such as the garbage collector or the compiler. In the GraalVM sandbox, untrusted code runs in dedicated VM instances (GraalVM isolates), separating services and JDK classes of host and guest by design.

- Resource limits: The Java Security Manager cannot restrict the usage of computational resources such as CPU time or memory, allowing untrusted code to DoS the JVM. The GraalVM sandbox offers controls to limit several computational resources that guest code may consume (CPU time, memory, threads, processes), addressing availability concerns.

- Configuration: Crafting a Java Security Manager policy was often found to be a complex and error-prone task, requiring a subject matter expert who knows exactly which parts of the program require what level of access. The GraalVM sandbox instead provides security profiles that focus on common sandboxing use cases and threat models.

Reporting vulnerabilities #

If you believe you have found a security vulnerability, please submit a report to secalert_us@oracle.com preferably with a proof of concept. Please refer to Reporting Vulnerabilities for additional information including our public encryption key for secure email. We ask that you do not contact project contributors directly or through other channels about a report.